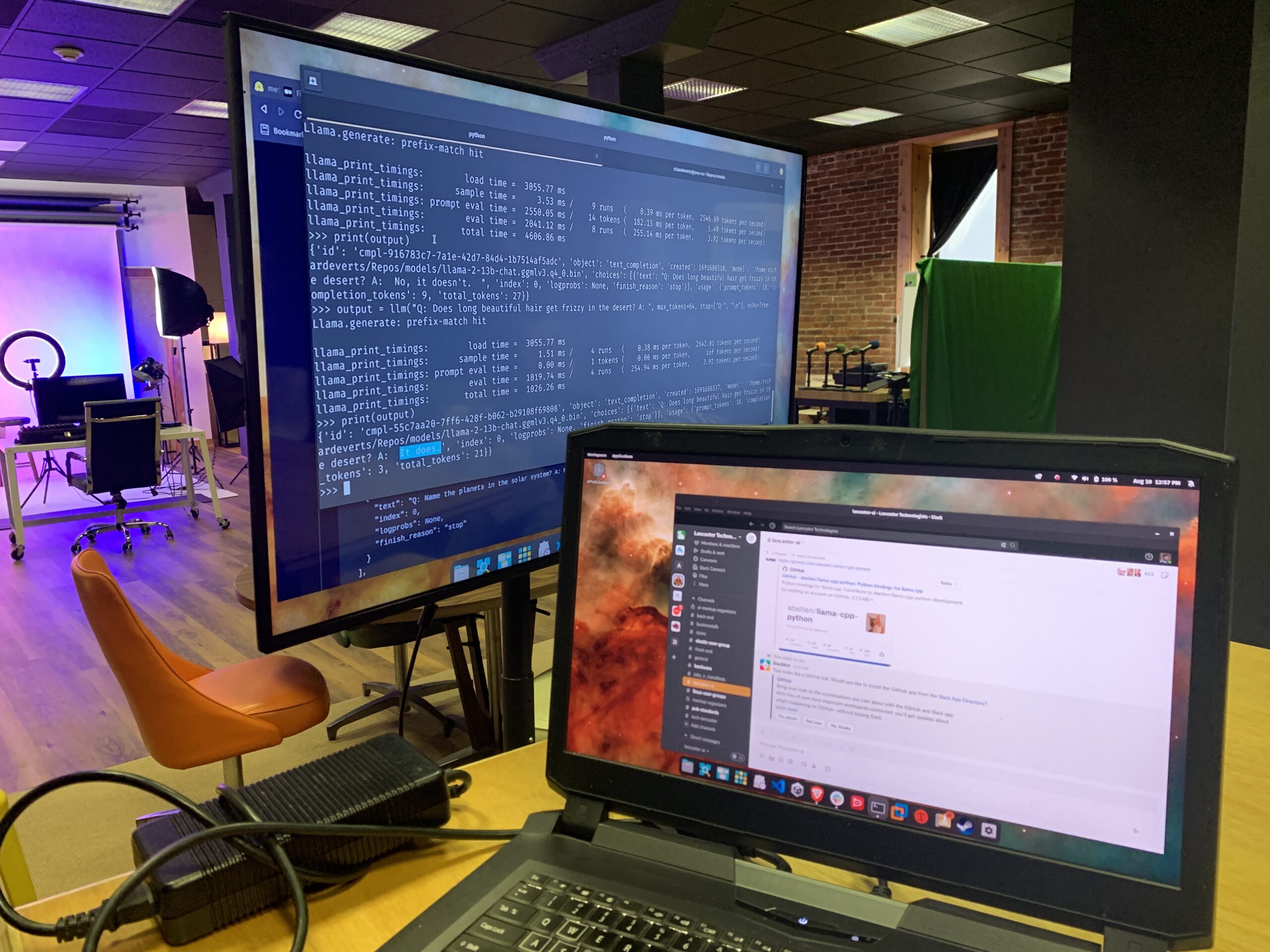

Great Lancaster AI event on running Llama 2 locally on our laptops with llama.cpp and its Python wrappers! Awesome questions on embeddings, installations, and Burning Man!

The basic steps were pretty easy.

- Install the llama.cpp Python wrapper for your operating system.

- Download the GGML model you want from Hugging Face. We used this one for the demo today.

- Profit!
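For anyone who missed it, here is a minimal sketch of what those steps can look like in Python. It assumes the wrapper is the `llama-cpp-python` package (installed with `pip install llama-cpp-python`) and that a model file has already been downloaded. The model path, the `build_prompt` helper, and the `ask` helper are hypothetical names for illustration, not the exact code from the demo. Note that newer releases of the wrapper expect GGUF files, so a GGML file may need an older release.

```python
from pathlib import Path

# Hypothetical location; point this at whatever model file you downloaded.
MODEL_PATH = Path("models/your-llama-2-model.bin")


def build_prompt(question: str) -> str:
    """Wrap a question in a simple Q/A prompt (an illustrative format)."""
    return f"Q: {question.strip()} A:"


def ask(question: str, model_path: Path = MODEL_PATH, max_tokens: int = 64) -> str:
    """Load the model and return its completion for one question."""
    # Imported here so this file still loads when the wrapper is not installed.
    from llama_cpp import Llama

    llm = Llama(model_path=str(model_path))
    # Stop when the model starts writing a new "Q:" line of its own.
    result = llm(build_prompt(question), max_tokens=max_tokens, stop=["Q:"])
    return result["choices"][0]["text"].strip()


if __name__ == "__main__":
    if MODEL_PATH.exists():
        print(ask("What is a llama?"))
    else:
        print(f"No model file at {MODEL_PATH}; download one first.")
```

Loading the model is the slow part, so for more than one question it is worth creating the `Llama` object once and reusing it rather than calling `ask` repeatedly.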

Special thanks to everyone who came out to work through it. Looking forward to seeing everyone again shortly!